Using deep learning to classify radio galaxies

- Vesna Lukic

- Feb 12, 2021

Updated: Feb 18, 2021

During my PhD, I looked at how we can automatically classify images of galaxies based on their appearance. At the time, deep learning techniques were relatively new, and I became aware of the winning entry in the Kaggle Galaxy Zoo competition, which used deep neural networks.

Since I was also working with image datasets, I thought it would be worth trying this approach too. Whereas the Kaggle Galaxy Zoo competition aimed to predict how citizen scientists answered questions about optical galaxies, I wanted to see whether deep learning could be used to distinguish between different classes of radio galaxies.

Radio galaxies are radio-loud Active Galactic Nuclei (AGN), consisting of a core, jets and lobes. The jets shoot out from the core and terminate in lobes at the outer edges. Although radio galaxies display many morphologies, there are two main classes, known as Fanaroff-Riley type I and type II (FRI and FRII).

FRI radio galaxies have the brightest part of the radio emission closest to the core.

FRIIs have radio emission that is brightest towards the lobes of the radio galaxy.

Source of FRI and FRII figures: Judith Croston and the LOFAR Surveys team (private communication).
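
Fanaroff and Riley originally drew this distinction using a ratio: the separation between the brightest regions on opposite sides of the core, divided by the total extent of the source, with values below 0.5 for FRI and above 0.5 for FRII. A minimal sketch of that classic rule follows, assuming both distances have already been measured in the same units. The function name is hypothetical, and this is the traditional hand-measured criterion, not the deep-learning approach discussed in this post:

```python
def fanaroff_riley_class(hotspot_separation: float, total_extent: float) -> str:
    """Classify a radio galaxy with the Fanaroff-Riley ratio (hypothetical helper).

    hotspot_separation: distance between the brightest regions on opposite
        sides of the core.
    total_extent: total size of the radio source, in the same units.
    A ratio below 0.5 gives FRI; otherwise FRII (treating exactly 0.5 as
    FRII is an arbitrary choice made in this sketch).
    """
    if total_extent <= 0:
        raise ValueError("total_extent must be positive")
    ratio = hotspot_separation / total_extent
    return "FRI" if ratio < 0.5 else "FRII"


# Brightest emission close to the core: small ratio, so FRI.
print(fanaroff_riley_class(20.0, 100.0))
# Brightest emission far out towards the lobes: large ratio, so FRII.
print(fanaroff_riley_class(90.0, 100.0))
```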

Convolutional neural networks, which underpin much of deep learning for image analysis, build on traditional neural networks. A traditional neural network has an input layer, one or more intermediate (or hidden) layers, and an output layer.

The layers are made up of nodes, which are fully interconnected between adjacent layers. The network is initialised with a set of weights, which govern the strength of the connections between nodes, and biases, which shift the input to each node.

Each node's output is determined by an activation function, usually nonlinear, which is applied to the weighted sum of the node's inputs plus its bias.

Figure from https://towardsdatascience.com/inroduction-to-neural-networks-in-python-7e0b422e6c24
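
To make the roles of weights, biases and the activation function concrete, here is a minimal sketch of fully connected layers in plain Python. It assumes a sigmoid activation (one common choice among several), and the function names and weight values are made up purely for illustration:

```python
import math


def sigmoid(z: float) -> float:
    """A common nonlinear activation function that squashes z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation=sigmoid):
    """Compute the outputs of one fully connected layer.

    weights[j][i] is the strength of the connection from input i to node j;
    biases[j] shifts the weighted sum for node j before the activation.
    """
    outputs = []
    for node_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


# Tiny network: 2 inputs -> 2 hidden nodes -> 1 output node (made-up weights).
x = [1.0, 0.5]
hidden = dense_layer(x, weights=[[0.2, -0.4], [0.7, 0.1]], biases=[0.0, -0.3])
output = dense_layer(hidden, weights=[[1.5, -2.0]], biases=[0.1])
print(hidden, output)
```

Every input is connected to every node in the next layer, which is why the number of weights grows quickly as layers get wider.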

The inputs are fed into the input layer, and the predictions are produced by the output layer. In supervised learning (where labels are supplied along with the inputs), an error is computed from the difference between each prediction and its label. This error is sent back through the network (a process known as backpropagation), and the weights and biases are adjusted to reduce it. This cycle of making predictions, sending the error back and adjusting the weights and biases is repeated until the error stops decreasing appreciably. With larger or more complex input datasets, one needs increasingly more nodes and layers, leading to many more parameters. Such networks can become computationally intensive and time-consuming to train, and can suffer from the vanishing gradient problem, in which the error signal shrinks as it is sent back through many layers, so that the earlier layers effectively stop learning.
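
The training cycle described above can be sketched for a single sigmoid node. This is a minimal illustration, assuming a squared-error loss and plain gradient descent applied one example at a time; these are illustrative choices, and in a multi-layer network backpropagation applies the same chain-rule calculation layer by layer. The function names are hypothetical:

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Forward pass of a single sigmoid node."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_neuron(samples, labels, learning_rate=0.5, epochs=2000):
    """Train one sigmoid node with gradient descent on the squared error.

    samples: list of input vectors; labels: list of 0/1 targets.
    Returns the learned weights and bias.
    """
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            pred = predict(weights, bias, x)
            # Error signal: derivative of 0.5 * (pred - y)**2 with respect to
            # the node's weighted sum, using sigmoid'(z) = pred * (1 - pred).
            delta = (pred - y) * pred * (1.0 - pred)
            # Move each parameter a small step against its gradient.
            weights = [w - learning_rate * delta * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * delta
    return weights, bias


# Usage: learn the logical OR of two inputs.
samples = [[0, 0], [0, 1], [1, 0], [1, 1]]
labels = [0, 1, 1, 1]
w, b = train_neuron(samples, labels)
print([round(predict(w, b, x), 3) for x in samples])
```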

Figure taken from https://freecontent.manning.com/neural-network-architectures/
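
The vanishing gradient problem can be seen in a toy calculation. With sigmoid activations, the error signal passing back through each layer is multiplied by the sigmoid's derivative, which is at most 0.25. The sketch below deliberately assumes one node per layer, a weight of 1 everywhere and a pre-activation of 0 (the best case for the sigmoid derivative); it is not a full network, and the function names are hypothetical:

```python
import math


def sigmoid_derivative(z: float) -> float:
    """Derivative of the sigmoid; its maximum value is 0.25, reached at z = 0."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def gradient_scale(n_layers: int, weight: float = 1.0, z: float = 0.0) -> float:
    """Factor by which the error signal is scaled after being sent back through
    n_layers sigmoid layers, assuming one node per layer with the same weight
    and the same pre-activation z in every layer."""
    scale = 1.0
    for _ in range(n_layers):
        scale *= weight * sigmoid_derivative(z)
    return scale


for depth in (1, 5, 10, 20):
    print(depth, gradient_scale(depth))
```

Even in this best case the signal shrinks by a factor of four per layer, so after 20 layers it is smaller than one part in a trillion.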

This is where convolutional neural networks become very useful.

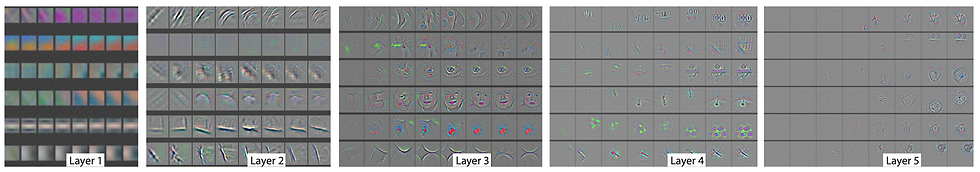

Instead of fully interconnecting the nodes between adjacent layers, convolutional neural networks employ a set of filters, which scan across the image and detect features. The earlier layers detect simple, low-level features such as edges and blobs, whereas later layers combine these into increasingly complex, higher-level features. The overall effect of stacking convolutional layers is a hierarchical extraction of features.

Figure taken from Zeiler, Fergus (2013). Visualizing and Understanding Convolutional Networks
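
The core operation of a convolutional layer can be sketched in a few lines of plain Python. The example assumes a single-channel image, a stride of one and no padding, and uses a hand-picked 2×2 filter that responds to vertical edges; in a real network the filter values are learned during training. The names are hypothetical:

```python
def convolve2d(image, kernel):
    """Slide a small filter over a 2D image (no padding, stride 1).

    Like most deep-learning libraries, this computes cross-correlation (the
    kernel is not flipped): each output value is the sum of the element-wise
    products between the kernel and the image patch underneath it.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy 4x5 image: dark on the left, bright on the right (a vertical edge).
image = [[0, 0, 1, 1, 1] for _ in range(4)]
# Hand-picked vertical-edge filter; a CNN would learn these values instead.
edge_filter = [[-1, 1],
               [-1, 1]]
feature_map = convolve2d(image, edge_filter)
print(feature_map)
```

The filter responds only where the brightness changes from left to right, and the same four weights are reused at every position in the image.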

Because each filter's weights are shared across the whole image, the use of filters drastically reduces the number of parameters. Convolutional networks also commonly include pooling layers.
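
A rough illustration of the parameter saving follows. The layer sizes are assumptions chosen purely for illustration: a 100×100 single-channel image, a fully connected hidden layer with one node per pixel, versus a convolutional layer with 32 filters of size 3×3. The function names are hypothetical:

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus biases for a fully connected layer."""
    return n_in * n_out + n_out


def conv_params(kernel_size: int, in_channels: int, n_filters: int) -> int:
    """Weights plus biases for a 2D convolutional layer with square filters.

    The count does not depend on the image size, because each filter's
    weights are shared across every position it scans.
    """
    return kernel_size * kernel_size * in_channels * n_filters + n_filters


pixels = 100 * 100  # assumed 100x100 single-channel image
print(dense_params(pixels, pixels))  # one hidden node per pixel
print(conv_params(3, 1, 32))         # 32 filters of size 3x3
```

The fully connected layer needs just over 100 million parameters, while the convolutional layer needs 320.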

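Pooling summarises the information in a local region of the image, for example by keeping only the maximum value in each small window. This shrinks the feature maps passed to later layers, further reducing the number of parameters and the amount of computation. One thing to note is that pooling discards some information, such as the exact position of a feature within each window.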
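
Max pooling is one common choice (average pooling is another). A minimal sketch with non-overlapping 2×2 windows, using a made-up 4×4 feature map; the function name is hypothetical:

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each
    size x size block. Rows or columns that do not fill a whole block
    are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(
                feature_map[i * size + di][j * size + dj]
                for di in range(size)
                for dj in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


fm = [[1, 3, 2, 0],
      [4, 2, 1, 5],
      [0, 1, 7, 2],
      [3, 2, 4, 6]]
pooled = max_pool2d(fm)
print(pooled)
```

The 4×4 map shrinks to 2×2, and where each maximum sat within its window is no longer recorded, which is the information loss described above.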

Over the course of my PhD, I experimented with different convolutional neural network architectures designed to classify radio galaxies by their number of components, as well as by their Fanaroff-Riley class. I also compared the performance of the more recently developed capsule networks against that of convolutional networks, and explored how useful convolutional networks are for source-finding (locating galaxies).

An in-depth discussion of these works will follow in future posts.
